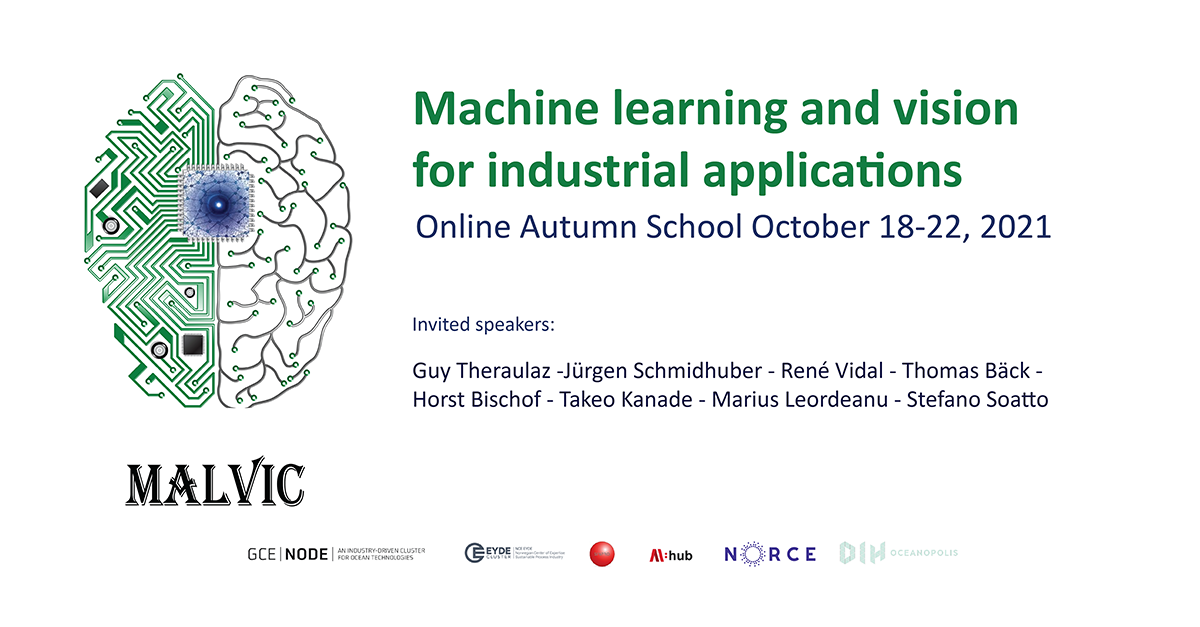

Machine learning and computer vision have the potential to significantly improve the automation and autonomy of many industrial applications (e.g., offshore, automotive, telecommunication, gaming, and multimedia) by enhancing operational performance, decreasing the cost of manual operations, increasing benefits, minimizing losses, optimizing productivity, and improving safety and security.

The goal of the MALVIC Autumn School is to bring together pioneering international scientists in machine learning and computer vision with researchers from academia and practitioners from industry, in a unique setting for the discussion and demonstration of practical, hands-on machine learning and vision research and development. Offshore industrial applications and industrial process scenarios are examples of the areas the autumn school targets.